Lara Alegre, Jose Sabater, Philip Best, Rafaël I J Mostert, Wendy L Williams, Gülay Gürkan, Martin J Hardcastle, Rohit Kondapally, Tim W Shimwell, Daniel J B Smith, A machine-learning classifier for LOFAR radio galaxy cross-matching techniques, Monthly Notices of the Royal Astronomical Society, Volume 516, Issue 4, November 2022, Pages 4716–4738, https://doi.org/10.1093/mnras/stac1888


ABSTRACT

New-generation radio telescopes like LOFAR are conducting extensive sky surveys, detecting millions of sources. To maximize the scientific value of these surveys, radio source components must be properly associated into physical sources before being cross-matched with their optical/infrared counterparts. In this paper, we use machine learning to identify those radio sources for which either source association is required or statistical cross-matching to optical/infrared catalogues is unreliable. We train a binary classifier using manual annotations from the LOFAR Two-metre Sky Survey (LoTSS). We find that, compared to a classification model based on just the radio source parameters, the addition of features of the nearest-neighbour radio sources, the potential optical host galaxy, and the radio source composition in terms of Gaussian components, all improve model performance. Our best model, a gradient boosting classifier, achieves an accuracy of 95 per cent on a balanced data set and 96 per cent on the whole (unbalanced) sample after optimizing the classification threshold. Unsurprisingly, the classifier performs best on small, unresolved radio sources, reaching almost 99 per cent accuracy for sources smaller than 15 arcsec, but still achieves 70 per cent accuracy on resolved sources. It flags 68 per cent more sources than required as needing visual inspection, but this is still fewer than the manually developed decision tree used in LoTSS, while also having a lower rate of wrongly accepted sources for statistical analysis. The results have an immediate practical application for cross-matching the next LoTSS data releases and can be generalized to other radio surveys.

1 INTRODUCTION

The number of detected sources and the complexity of the structures in astronomical images have increased dramatically in recent years, with high-sensitivity telescopes surveying deeper but also wider areas of the sky. Radio astronomy has been at the forefront of this big data revolution, with telescopes like the LOw Frequency ARray (LOFAR; van Haarlem et al. 2013), the Very Large Array (VLA), and the Australian Square Kilometre Array Pathfinder Telescope (ASKAP; Hotan et al. 2021). These have been conducting wide radio continuum surveys, such as the LOFAR Two-metre Sky Survey (LoTSS; Shimwell et al. 2017, 2019, 2022), the VLA Sky Survey (VLASS; Lacy et al. 2020), and the Rapid ASKAP Continuum Survey (RACS; Hale et al. 2021) and the Evolutionary Map of the Universe (EMU; Norris et al. 2011), respectively. When completed, these surveys will have covered both hemispheres and discovered tens of millions of radio sources. This brings radio astronomy into a revolutionary new era: large samples enable detailed statistical studies whilst probing the unexplored Universe at these wavelengths (see Norris 2017 for a review). In addition to producing scientific results, these surveys are also developing technology in preparation for the upcoming Square Kilometre Array (SKA; Dewdney et al. 2009), which will be the world’s most powerful radio telescope. The SKA will generate massive amounts of data and is expected to detect billions of radio sources.

In order to extract the full scientific return from these surveys, it is essential to cross-match the objects detected at radio wavelengths to their counterparts at other wavelengths, particularly optical and near-infrared. This allows us to identify the host galaxies, classify the radio sources according to their morphology, black hole activity, and other characteristics, and derive basic physical properties such as redshifts, luminosities and stellar masses (e.g. Best et al. 2005; Smolčić et al. 2017; Duncan et al. 2019; Gürkan et al. 2022). The cross-identification of radio galaxies with their optical (or infrared) counterparts is a complex process due to the extended and multicomponent nature of many radio sources, as well as the mismatch in the angular resolution between the radio and optical surveys. Traditionally, it has relied mostly on statistical methods, visual analysis, or a combination of the two (see Williams et al. 2019, hereafter referred to as W19, for a discussion).

In early continuum radio surveys, the sources detected were mainly bright active galactic nuclei (AGNs); only a small proportion of these had counterparts in the all-sky optical imaging data available at that time, but the samples were small enough that dedicated deep optical imaging of individual sources could be coupled with visual analysis (e.g. Laing, Riley & Longair 1983). By the turn of the century, a statistical comparison of the Faint Images of the Radio Sky at Twenty centimetres survey (FIRST; Becker, White & Helfand 1995) with the large-area optical imaging from the Sloan Digital Sky Survey (SDSS; York et al. 2000) provided optical identifications for around 30 per cent of the ∼10⁵ radio source host galaxies (Ivezić et al. 2002). Recent radio surveys have been revealing still fainter sources, including higher fractions of star-forming galaxies (SFGs) that begin to dominate over AGNs at low flux densities. At the same time, deeper optical and near-infrared observations are now available over large sky areas, such as imaging from the Panoramic Survey Telescope and Rapid Response System (Pan-STARRS-1) survey (Chambers et al. 2016) or the Dark Energy Spectroscopic Instrument (DESI) Legacy survey (Dey et al. 2019), with even deeper and wider imaging expected in the coming years from the Legacy Survey of Space and Time (LSST; Ivezić et al. 2019) and the Euclid Space Telescope surveys (Laureijs et al. 2011). These surveys increase both the fraction of radio sources with optical counterparts and the number of potentially confusing foreground or background sources. The simultaneous increase of possible matches and data volumes requires improvement in the current cross-matching techniques.

In LoTSS, the source density is already more than a factor of 10 higher than in the existing widely used large-area radio continuum surveys such as the National Radio Astronomy Observatory (NRAO) VLA Sky Survey (NVSS; Condon et al. 1998), the FIRST survey, the Sydney University Molonglo Sky Survey (SUMSS; Bock, Large & Sadler 1999), and the Westerbork Northern Sky Survey (WENSS; Rengelink et al. 1997). LoTSS detected more than 300 000 sources in its first data release, containing just the first 2 per cent of the survey (LoTSS DR1; Shimwell et al. 2019), and a second data release with almost 4.4 million sources covering 5634 deg², 27 per cent of the northern sky, has just been published (LoTSS DR2; Shimwell et al. 2022).

In LoTSS DR1, the radio sources were cross-matched with optical and near-infrared surveys, Pan-STARRS1 DR1 (Chambers et al. 2016) and the AllWISE catalogue (Cutri et al. 2013), respectively, and an optical and/or near-infrared counterpart was identifiable for 73 per cent of the LoTSS sources (W19). Compact sources, such as SFGs or compact AGNs, were cross-matched using the Likelihood Ratio technique (LR; e.g. Richter 1975; Willis & de Ruiter 1977; Sutherland & Saunders 1992; Ciliegi et al. 2003) which assesses the relative probability of a given optical source being a true counterpart against a randomly aligned optical object, based on source properties (for LoTSS DR1, the LR assessment considered both the magnitude and colour of the potential host galaxy; see Nisbet 2018, W19). This statistical method is reliable when the flux-weighted mean position of the radio emission is an accurate estimate of the location at which the radio source originates, and is therefore coincident with the optical emission. However, more extended sources cannot yet be reliably handled through these statistical methods. Furthermore, for radio sources with emission that is extended and/or split into different radio components (e.g. double-lobed sources), source detection algorithms often fail to correctly group together the multiple radio components into a single source, generating independent entries in the radio catalogues. In other cases, the source finder can incorrectly group individual physical radio sources together into a single-blended detection. Thus, radio catalogues are not always a true description of the physical sources, leading to further inaccuracies if statistical techniques are naively applied. In LoTSS DR1, these complex-structured, multicomponent and blended sources were therefore visually cross-matched alongside manual component association or dissociation.

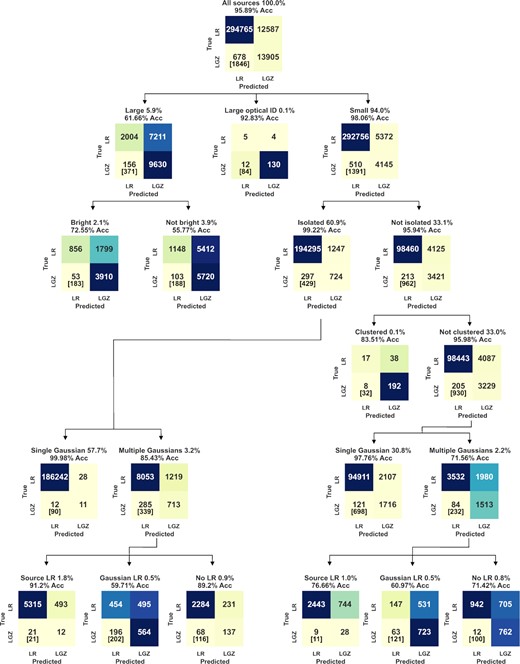

In order to discriminate between sources that require visual analysis and those that can be reliably cross-matched using the LR technique, W19 designed a decision tree based on the properties of the radio sources and their cross-ID LR values. This decision tree selected nearly 30 000 sources for visual inspection, corresponding to around 10 per cent of the total LoTSS DR1 sample. This was a conservative selection process, and indeed post-analysis (i.e. the examination of which ones actually required visual inspection, explained in Section 2) shows that only just over half of these sources actually needed to be inspected. LoTSS DR2 covers an area almost 15 times larger than DR1, with a higher fraction of counterparts expected due to the use of the (deeper) Legacy data set for cross-matching. The large number of sources makes visual inspection very challenging for more than a small fraction of the sources. While the ultimate goal is to replace all visual analysis with automated techniques, a more practical and immediate step is to minimize the amount of unnecessary inspection.

Some progress has been made to improve the current statistical methods, for example by modifying the LR technique to tackle the blending problem (Weston et al. 2018), by replacing the LR by Bayesian approaches (Fan et al. 2015, 2020; Mallinar, Budavári & Lemson 2017), or by applying machine-learning (ML) techniques (e.g. Alger et al. 2018). Various efforts have also been made to improve the cross-matching process for the extended/multicomponent radio sources, using a ridgeline approach (Barkus et al. 2022) and deep learning techniques mainly based on Convolutional Neural Networks (CNNs), for example to group radio source components (Mostert et al., in preparation) or to find the host galaxy in previously-selected sources with multiple radio components (Alger et al. 2018). CNNs have also been used to improve the source finding and identification (e.g. Vafaei Sadr et al. 2019), or for automatic source extraction and further morphology classification (e.g. Wu et al. 2019). Deep learning has been particularly successful in automating radio galaxy morphology classification of (previously associated) multicomponent sources using CNNs (e.g. Aniyan & Thorat 2017; Lukic et al. 2018, 2019; Alhassan, Taylor & Vaccari 2018), using transfer learning (Tang, Scaife & Leahy 2019) and using clustering methods (Galvin et al. 2020; Mostert et al. 2021) combined, for example, with Haralick features (Ntwaetsile & Geach 2021). However, deep learning models, which perform feature extraction from the images before classification, require a higher number of annotated examples to train, and are also more difficult to interpret and to adapt than simpler ML models. In addition, a variety of unforeseen limitations due to limited experimentation in radio astronomy can further introduce different biases. Some examples include issues related to the use of fixed-size data images (Mostert et al. 2021) or even the image input file format (Tang et al. 2019). 
Furthermore, none of these methods can yet perform reliable source association and fully cross-match extended and multicomponent sources. To date, the full cross-matching of modern large radio surveys has been only achieved through citizen science projects [e.g. Radio Galaxy Zoo (RGZ), Banfield et al. 2015] and extensive science team efforts [e.g. LOFAR Galaxy Zoo (LGZ), W19; Kondapally et al. 2021].

In this work, we propose a gradient boosting classifier (GBC) to identify which radio sources can be reliably cross-matched using the LR technique, or instead require visual inspection. We use supervised ML algorithms, which offer greater intuitive interpretation and are simpler to adjust and analyse than deep learning models. The model adopted is an ensemble of decision trees, and it was selected and optimized using Automated Machine Learning (AutoML; see Appendix A and He, Zhao & Chu 2021 for a review). While individual decision trees have been used in radio galaxy classification in the past (e.g. Proctor 2016), ensembles of decision trees have been proven to achieve better performance (Dietterich 2000). Examples of the use of ensembles of decision trees in radio astronomy include the classification of blazars using multiwavelength data (Arsioli & Dedin 2020) and the estimation of physical properties of radio sources such as redshifts (Luken et al. 2022).

We build a data set based on LoTSS DR1, which provides more than 300 000 annotated examples, and select a set of relevant features, allowing the model to successfully classify unseen sources with an accuracy of 94.6 per cent and select the ones that can be cross-matched by LR with a precision of 96.3 per cent. This helps to limit the manual analysis to the most complex sources (extended sources, sources with multiple components or blended detections), which are those for which the LR method is not successful. The results of this study are already being incorporated, by helping to identify unrelated radio components, into the automatic component association of sources larger than 15 arcsec from LoTSS DR1 (Mostert et al., in preparation). Furthermore, the methods applied in LoTSS DR1 are directly transferable to other parts of the LoTSS survey since the techniques used for processing and cross-matching the next data releases are broadly similar. Therefore, our work has immediate practical benefit for deciding which sources require visual analysis in LoTSS DR2 (Hardcastle et al. in preparation).

The paper is organized as follows. In Section 2, we describe the LoTSS DR1 data and in Section 3 we explain how these data were used to create a data set suitable for our ML classification problem. Section 4 refers to the experiments performed to select and optimize the model, including the specifications of the model adopted. The model performance and interpretation are explained in Section 5. In Section 6, we interpret the results of the model applied to the full LoTSS data sets, discussing the implications and comparing them against the methods currently used. The conclusions and a discussion of their significance for the next LoTSS data releases can be found in Section 7.

2 DATA

The data used in this work consist of LoTSS DR1 (Shimwell et al. 2019) radio catalogues that were derived from the 58 mosaic images of DR1, which cover 424 deg² over the Hobby–Eberly Telescope Dark Energy Experiment (HETDEX; Hill et al. 2008) Spring Field (right ascension 10h45m00s – 15h30m00s and declination 45°00′00″– 57°00′00″). LoTSS has a frequency coverage from 120 to 168 MHz, and achieves a typical rms noise level of 70 μJy beam⁻¹ over the DR1 region, with an estimated point source completeness of 90 per cent at a flux density of 0.45 mJy. LOFAR’s low frequencies combined with high sensitivity on short baselines give it high efficiency at detecting extended radio emission. LoTSS DR1 has an angular resolution of 6 arcsec and an astrometric precision of 0.2 arcsec, making it robust for host-galaxy identification.

In LoTSS DR1, the source detection was performed using the Python Blob Detector and Source Finder (pybdsf; Mohan & Rafferty 2015), where a total of 325 694 pybdsf sources were extracted with a peak detection above 5σ. pybdsf fits Gaussians to pixel islands assigning one or multiple Gaussians to each pybdsf source. The radio catalogues with the pybdsf properties for both the sources and the Gaussians include positions, angular sizes and orientations, peak and integrated flux density as well as statistical errors.

pybdsf sources do not always represent true radio sources (i.e. physically connected sources). Some of the radio components of extended sources may appear as separated and unrelated pybdsf sources, which need to be associated together into the same source in post-processing. We refer to these as multicomponent sources in the rest of the paper and they account for 2.8 per cent of LoTSS DR1. In other cases, Gaussians may be incorrectly grouped into one pybdsf source when they are actually distinct physical sources. In this case, we refer to them as blended sources and they make up only 0.3 per cent of LoTSS DR1. In the vast majority of cases (96.9 per cent in LoTSS DR1), however, pybdsf correctly associates the radio emission into true physical sources. We refer to these hereafter as single sources. These are, in most cases, compact sources composed of only one Gaussian, but can also be extended sources composed of several Gaussians (hence our definition of singles is not the same as the ‘S’ code from the pybdsf software used in W19). Even for these correctly associated sources, however, cross-matching with other surveys using statistical means alone can fail due to an incorrect (or missed) counterpart identification, especially if the source is extended and/or asymmetric. This is the case for 1.8 per cent of the sources of LoTSS DR1.

In order to enhance science quality, as part of LoTSS DR1, considerable effort was undertaken to properly associate the radio source components (or dissociate blended sources) and get the correct optical/near-infrared counterparts (W19). For the majority of LoTSS DR1 sources, pybdsf correctly associates source components and outputs an accurate estimate of the position and radio source properties, and therefore such sources were cross-matched statistically using LR. However, complex sources with multiple components or extended emission, and incorrectly blended sources, were sent to visual inspection. This was carried out on a private LOFAR Galaxy Zoo (LGZ) project, hosted on the Zooniverse platform, in which each source was inspected by at least five collaborators of the LOFAR consortium. The selection of the sources to be analysed in LGZ was done using a decision tree (also referred to as flowchart) built using the characteristics of the pybdsf sources and Gaussians, the neighbouring sources, and the LR of any optical/IR cross-matching (see W19).

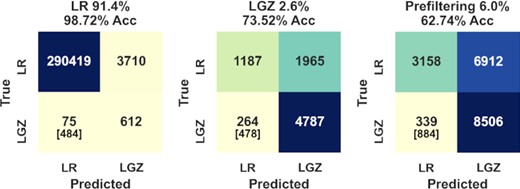

The decision tree generates three main outcomes: the source association and/or identification requires LGZ; the source has been correctly catalogued by pybdsf and the cross-identification (or lack of) can be made by LR; and the source is sent to a quick visual sorting (prefiltering), where one expert inspects the source and redirects it to one of the other two categories or identifies it as an artefact. A summary of the number of sources in each of these categories is given in Table 1, where we include in the prefiltering category 223 sources with large optical IDs that were automatically matched to a nearby (large angular size) SDSS or 2MASX galaxy since they were afterwards visually confirmed. We further exclude 2591 pybdsf sources identified by W19 as artefacts, except for one source which was automatically marked by the decision tree as an artefact but was instead noted during the LGZ process to be a genuine source. In LoTSS DR1, the artefacts were either removed in an initial stage of the selection process (the majority by being in the proximity of bright sources; 31 per cent) or by visual inspection (mainly during the prefiltering step; 55 per cent). In the next LoTSS releases, the improved calibration and imaging pipeline for the radio data (Tasse et al. 2021) means that we expect a lower proportion of artefacts, most of which will be clearly identifiable and removed at early stages. Furthermore, the properties of any remaining artefacts may be different due to calibration changes. For these reasons, we exclude the artefacts when constructing the ML classifier and analysing the results; our final data catalogue therefore contains 323 103 pybdsf sources. The values quoted in Table 1 refer to pybdsf sources and differ from those presented in table 5 of W19, which summarizes the total number of sources after component association or dissociation.

Table 1. For each of the main categories (LR, LGZ, and prefiltering) classified by the W19 decision tree, the table gives the number of sources that were suitable for LR and the number that required visual analysis, as determined using the final outcomes after visual inspection. The final column indicates the percentage of the time that the flowchart decision was correct (i.e. the proportion of sources that were assigned correctly to each of the categories).

| W19 decision | Total number | No. suitable for LR | No. requiring visual analysis | Percentage correct |
|---|---|---|---|---|
| LR | 295 225 | 294 129 | 1096ᵃ | 99.63 |
| LGZ | 8195 | 3144 | 5051 | 61.64 |
| Prefiltering | 19 683 | 10 079 | 9604 | 48.79 |
| Total | 323 103 | 307 352 | 15 751 | 95.57 |

ᵃ The 1096 sources selected by the decision tree for LR, but identified as requiring visual analysis, represent a lower limit to the true number as these were only identified when they were part of multicomponent sources for which other components were sent to LGZ (see Section 6.2 for further discussion of this).


Using the decision tree, W19 initially classified 91.37 per cent of the sources (295 225) as being suitable for LR analysis (see Table 1) and 8.63 per cent (27 878 sources) as requiring visual inspection (either prefiltering or LGZ). These numbers correspond to sources after removal of artefacts. After visual analysis and processing of the final DR1 data, in hindsight, the conclusion is (see Section 3.1) that 95.13 per cent (307 352) could be cross-matched using LR and 4.87 per cent (15 751) required visual inspection. For the sources that were sent directly to LGZ (8195 pybdsf sources), an examination of the final LGZ decision indicates that 5051 of them (61.64 per cent) were not correctly associated by pybdsf and therefore could not have had their optical identification (or lack of identification, in the case of no LR match) assigned statistically by LR. Similarly, the prefiltering step corresponds to 19 683 pybdsf sources, of which 9604 (48.79 per cent) could not have been processed using LR. In contrast, of the 295 225 pybdsf sources selected as suitable for cross-matching with LR, 294 129 (99.63 per cent) retain the LR cross-match in the final catalogue. In reality, the number of these that are correct will be marginally lower, since these sources were not subjected to visual examination unless they were part of a multicomponent source (usually the core of a radio source) for which one of the source components was sent to visual analysis. This was the route through which the 1096 sources that the decision tree sent to LR, but which required visual analysis, were discovered. We discuss this in more detail in Section 6.2.
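The percentages quoted above follow directly from the counts in Table 1. As a minimal sketch (the dictionary layout and function name are illustrative choices, not part of the LoTSS pipeline), the per-category and overall figures can be recomputed as follows:

```python
# Counts of pybdsf sources from Table 1 (artefacts already excluded).
table1 = {
    "LR": {"suitable_for_lr": 294_129, "needs_visual": 1_096},
    "LGZ": {"suitable_for_lr": 3_144, "needs_visual": 5_051},
    "Prefiltering": {"suitable_for_lr": 10_079, "needs_visual": 9_604},
}


def n_correct(decision, counts):
    """Number of sources for which the decision-tree outcome was right.

    A decision of 'LR' is right when the source was suitable for LR;
    'LGZ' and 'Prefiltering' are right when visual analysis was needed.
    """
    key = "suitable_for_lr" if decision == "LR" else "needs_visual"
    return counts[key]


# Percentage correct for each decision-tree category.
per_category = {}
for decision, counts in table1.items():
    total = counts["suitable_for_lr"] + counts["needs_visual"]
    per_category[decision] = 100.0 * n_correct(decision, counts) / total

# Overall accuracy of the decision tree across all sources.
n_total = sum(c["suitable_for_lr"] + c["needs_visual"] for c in table1.values())
overall = 100.0 * sum(n_correct(d, c) for d, c in table1.items()) / n_total

# Fraction of all sources sent to visual inspection (LGZ or prefiltering).
n_sent = n_total - sum(table1["LR"].values())
sent_percentage = 100.0 * n_sent / n_total

for decision, value in per_category.items():
    print(f"{decision}: {value:.2f} per cent correct")
print(f"Overall: {overall:.2f} per cent; sent to inspection: {sent_percentage:.2f} per cent")
```

Rounded to two decimals, these reproduce the final column of Table 1 and the 8.63 per cent of sources sent to visual inspection.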

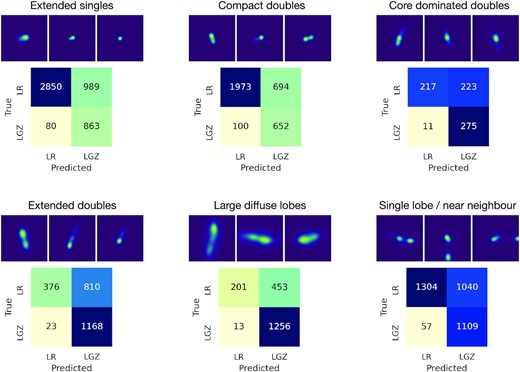

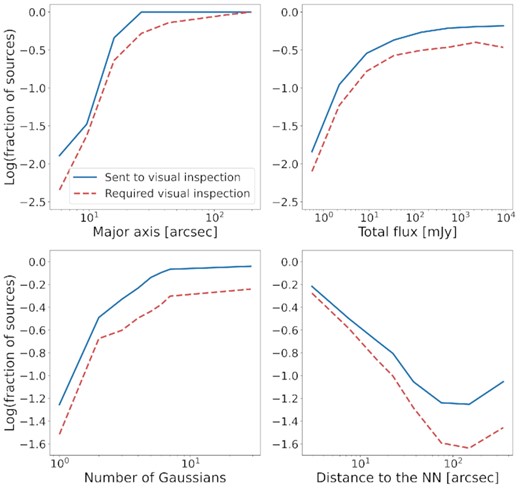

It is evident from Table 1 that overall the W19 decision tree has a high accuracy (95.57 per cent). This is mainly because most of the sources are compact and can be cross-matched by LR (where the application of statistical methods results in very high precision). However, the decision tree places about twice as many sources into the LGZ and prefiltering categories as required, increasing the burden on visual analysis. Fig. 1 illustrates the dependence of the decision tree outcomes on some key pybdsf source properties: the major axis length, the total radio flux density, the number of Gaussians that compose a pybdsf source, and the distance of each pybdsf source to its nearest neighbour (NN). In each panel, the blue line shows the fraction of sources that were sent to visual inspection, and the red dashed line shows the fraction of sources that actually needed to be inspected, as determined from the final cross-matched catalogues incorporating the LGZ outcomes. The plots show that the fraction sent for visual analysis increases with increasing source size (note that 15 arcsec was the limit used by W19 to distinguish between ‘small’ and ‘large’ sources, with all the large sources being visually inspected, either directly in LGZ or during the prefiltering stage), increasing flux density, increasing number of Gaussian components, and decreasing distances to the NN. These are in line with expectations, as they are all indications that a given source is more likely to be extended and complex. Interestingly, in all cases the red lines are broadly scaled down from the fractions sent to visual inspection by about a factor of 2 with no strong parameter dependencies (fluctuations range only from around 1.5–2.5 across the parameter space). This indicates that it would not be straightforward to improve the decision tree outcomes simply by adjusting these parameter values.

Figure 1. Fraction of pybdsf sources sent to visual inspection by the W19 decision tree (blue lines) and the fraction that actually needed to be inspected (as determined from the final visual inspection outcomes; red dashed lines) as a function of different source parameters: major axis length, total flux density, total number of Gaussians that compose each pybdsf source, and distance of each pybdsf source to its nearest neighbour (NN) pybdsf source.

3 DATA SET

In supervised ML, models are learned from a set of labelled examples drawn from the data set. The goal is to predict to which class a previously unseen example belongs based on the value of its features. The data set is a key input for training the ML model and relies upon an adequate and well-profiled number of examples. We create our data set by evaluating all 323 103 pybdsf sources from LoTSS DR1 based on their individual characteristics and assigning them to different classes (Section 3.1). We create different sets of features by using radio source parameters and optical information (Section 3.2), and we address the class imbalance problem by exploring different ways of balancing the data set (Section 3.3). The impact of these last two factors on the classification is investigated further in Section 4.

3.1 Classes

To create the classes, we first evaluated each pybdsf source (after the results of any deblending or LGZ source association) and assigned it an ‘association flag’ according to the outcome: sources that were neither deblended nor associated with other pybdsf sources (singles, flag 1); sources that were deblended (blends, flag 2); and pybdsf sources that were grouped with other pybdsf sources (multicomponents, flag 4). Note that a small number of sources have a combination of flags, since they were first deblended and afterwards one or more of the deblended components was grouped with another pybdsf source (leading to flag 6).
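The flag values 1, 2, and 4, with 6 arising for sources that were both deblended and grouped, read naturally as bit flags. The sketch below assumes that interpretation; the class and function names are hypothetical and are not taken from the LoTSS catalogues.

```python
from enum import IntFlag


class AssociationFlag(IntFlag):
    """Hypothetical bit-flag view of the 'association flag' described above."""

    SINGLE = 1          # neither deblended nor associated with other sources
    BLEND = 2           # deblended into more than one source
    MULTICOMPONENT = 4  # grouped with other pybdsf sources


def describe(flag_value):
    """Return the names of the individual outcomes encoded in a flag value."""
    flag = AssociationFlag(flag_value)
    return sorted(member.name for member in AssociationFlag if member in flag)


# Flag 6 = 2 + 4: a source that was deblended and then partly regrouped.
print(describe(6))
print(describe(1))
```

Under this reading, a combined value such as 6 decomposes unambiguously into its constituent outcomes.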

To create these outcomes, the correspondence between each pybdsf source and the final radio source association (or lack of association) was assessed using the pybdsf radio source catalogue from Shimwell et al. (2019) and the final value-added catalogue (source associations and optical IDs) from W19. pybdsf sources that were grouped with other pybdsf sources appear as components of a radio source in the corresponding component catalogue, and pybdsf sources that were deblended appear as two or more radio sources.

To create a final diagnosis, we also inspected the ‘single’ sources (i.e. the ones with the association flag 1) in order to evaluate whether the LR was a suitable method to identify the host galaxy. This is the case for those sources where the final ID in the value-added catalogue is the same as would have been drawn through LR analysis, or where there was no ID in the final catalogue and the LR analysis also predicted no ID. In contrast, if visual analysis resulted in a change in optical ID (or a change from having no LR ID to having an ID, or vice versa) then these sources are not suitable for cross-matching using the LR method. As a result of this evaluation, the sources were assigned into two classes (denoted by the flag ‘accept_lr’ throughout this work):

Class 1: pybdsf sources that were not associated with other pybdsf sources, and were not deblended, and for which LR gave the same outcome as was finally accepted in the value-added catalogue (i.e. same host galaxy ID, or correctly gave no ID). These sources would be suitable for LR analysis.

Class 0: pybdsf sources that were either associated with other pybdsf sources in LGZ, or deblended into more than one source, or for which LR would obtain an incorrect ID. These sources are all unsuitable for analysis by LR alone.

The classes comprise 307 352 sources suitable for LR (class 1) and 15 751 that require visual analysis (class 0); of the latter, 9072 are multiple component pybdsf sources, 857 are blended pybdsf sources, and 5822 are single sources for which a simple application of LR would produce an incorrect ID. Artefacts (which we exclude from the analysis) correspond to pybdsf sources that are not in the final DR1 value-added catalogue.
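The class definition above amounts to a simple rule on the association flag and on whether LR reproduces the final identification. The following is a minimal sketch of that rule; the argument names `lr_id` and `final_id` are hypothetical, with `None` standing for "no ID", and the actual catalogue columns may be organized differently.

```python
def accept_lr(association_flag, lr_id, final_id):
    """Return 1 if the source is suitable for LR alone, otherwise 0.

    association_flag: 1 for singles; any other value means the source was
        deblended and/or grouped with other pybdsf sources.
    lr_id: host ID that LR would assign, or None if LR predicts no ID.
    final_id: host ID in the final value-added catalogue, or None.
    """
    is_single = association_flag == 1
    # None == None covers the case where LR correctly gave no ID.
    same_outcome = lr_id == final_id
    return 1 if (is_single and same_outcome) else 0


# Class sizes quoted in the text, used here as a consistency check.
class0_breakdown = {"multicomponent": 9_072, "blend": 857, "single_wrong_lr": 5_822}
n_class0 = sum(class0_breakdown.values())
n_class1 = 307_352

print(accept_lr(1, "host_A", "host_A"))  # single, LR agrees
print(accept_lr(1, None, "host_B"))      # single, visual analysis added an ID
print(accept_lr(4, "host_A", "host_A"))  # multicomponent source
print(n_class0, n_class0 + n_class1)
```

The three class-0 subcategories sum to the 15 751 sources requiring visual analysis, and together with class 1 they account for all 323 103 pybdsf sources in the data set.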

3.2 Features

As input features for the ML classifier we used radio source parameters along with properties of the LR matches for both the pybdsf source being considered and its nearest neighbour (NN). We discuss these below and list them in Table 2.

Table 2. List of features used in the analysis. These were selected or calculated using different LoTSS DR1 catalogues*. The LR threshold value adopted in LoTSS DR1 (Lthr = 0.639) was used to scale LR value features (these have the suffix tlv). Features in logarithmic scale appear with the log prefix. Sources refer to pybdsf sources.

| Features . | Definition and origin . |

|---|---|

| Baseline (BL) | |

| Maj | Source major axis (arcsec)a |

| Min | Source minor axis (arcsec)a |

| Total_flux | Source integrated flux density (mJy)a |

| Peak_flux | Source peak flux density (mJy bm-1)a |

| log|$\_$|n|$\_$|gauss | No. Gaussians that compose a sourceb |

| Likelihood ratio (LR) | |

| log|$\_$|lr|$\_$|tlv | Log10(source LR value match/Lthr)c |

| lr|$\_$|dist | Distance to the LR ID match (arcsec)c |

| Gaussians (GAUS) | |

| gauss|$\_$|maj | Gaussian major axis (arcsec)b |

| gauss|$\_$|min | Gaussian minor axis (arcsec) b |

| gauss|$\_$|flux|$\_$|ratio | Gaussian/source flux ratioa, b |

| log|$\_$|gauss|$\_$|lr|$\_$|tlv | Log10(Gaussian LR/Lthr)c |

| gauss|$\_$|lr|$\_$|dist | Distance to the LR ID match (arcsec)c |

| log|$\_$|highest|$\_$|lr|$\_$|tlv | Log10(source or Gaussian LR/Lthr)c |

| Nearest neighbour (NN) | |

| NN|$\_$|45 | No. of sources within 45″a |

| NN|$\_$|dist | Distance to the NN (arcsec)a |

| NN|$\_$|flux|$\_$|ratio | NN flux/source flux density ratioa |

| log|$\_$|NN|$\_$|lr|$\_$|tlv | Log10(LR value of the NN/Lthr)c |

| NN|$\_$|lr|$\_$|dist | Distance to the LR ID match (arcsec)c |

| Closest prototype (SOM) | |

| 10x10|$\_$|closest|$\_$|prototype|$\_$|x1 | cos (2π Closest|$\_$|prototype|$\_$|x/10)d |

| 10x10|$\_$|closest|$\_$|prototype|$\_$|x2 | sin (2π Closest|$\_$|prototype|$\_$|x/10)d |

| 10x10|$\_$|closest|$\_$|prototype|$\_$|y1 | cos (2π Closest|$\_$|prototype|$\_$|y/10)d |

| 10x10|$\_$|closest|$\_$|prototype|$\_$|y2 | sin (2π Closest|$\_$|prototype|$\_$|y/10)d |


The radio features were built from the pybdsf catalogue from Shimwell et al. (2019), where each pybdsf source has an identifier (source name) with the corresponding radio properties; here, we use the major and minor axis sizes and the peak and total flux densities. In addition to these basic radio properties, we used the LR value of the best match and the distance to this match. We computed the LR values for the pybdsf sources and for each of the Gaussians that comprise a pybdsf source in the same way as described in W19, with minor modifications that resulted from improvements of the original code (Kondapally et al. 2021).

We also used the Gaussian component catalogue (described in Shimwell et al. 2019), which contains the radio information for all the Gaussians that compose each pybdsf source. We use the number of Gaussian components comprising a source (indicative of the morphological complexity of the source), and also use the properties (major and minor axis size, fractional source flux density, and LR match properties) of the Gaussian with the highest LR value, or of the brightest Gaussian if the LR of all Gaussian components is below the LoTSS DR1 LR threshold adopted in W19.

We also used the radio and LR properties of the NN source. In addition, we computed the number of radio sources within 45 arcsec (used as an estimate of the local source density, which might be indicative of the presence of multicomponent sources) and the flux ratio between the source and its NN.

Finally, we investigated using the positions of the LoTSS DR1 sources on a cyclic Self-Organizing Map (SOM; Mostert et al. 2021) as input features. The SOM provides information of the different LoTSS DR1 morphological source ‘prototypes’ on a two-dimensional grid.

In ML, the quality of the features affects the ability of the model to learn. In order to feed useful features that can be more easily interpreted by the algorithm, we made the following transformations to the data:

We searched the catalogues for missing values (e.g. LR values where there was no potential host within the 15 arcsec search radius), which we assigned extreme values (e.g. a very low arbitrary value of $10^{-135}$ in the case of LR), even though the tree models adopted in Section 4 can in general handle missing data well.

We used the log value of the number of Gaussians, since complex sources can be made of dozens of Gaussians (up to 51 in LoTSS DR1).

We encode the values of the SOM morphological prototypes into cyclical features. The prototypes are located on a square grid with (x,y) coordinates. Each radio source is mapped to the prototype of the SOM that it most resembles. We transformed the corresponding (x,y) coordinates by using a sine and a cosine transform: this creates two new features from each of the original ones, but ensures the cyclical nature of the SOM is retained. We set the values of the prototypes to an arbitrary high value of $10^{20}$ when the source is not available.

We set the value of the LR to a log scale, although this choice has no effect on our results (decision tree models, which we adopt in Section 4, are not sensitive to feature transformations). Using a log scale allows this feature to be used interchangeably with different classifiers (e.g. neural networks).

We create a feature which uses either the LR of the source or, if the LR of the source is lower than the LR of one of the Gaussians that make up the source, the LR of the Gaussian component with the highest LR value. This is more indicative of an LR match when the source is composed of multiple Gaussians, one of which traces the radio core (and it is the same if the source is composed of only one Gaussian). This can also be indicative of a blended source, especially if the source LR value is below the LR threshold.

We further scaled the LR values by dividing them by the LR threshold value used to process the sources in the HETDEX field (only sources for which the match had a LR value higher than 0.639 got an ID or no ID via this method). This has the advantage of making the model appropriate for future LoTSS fields that might use different optical/near-IR data sets with a correspondingly different LR threshold.
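The transformations listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code used to build the LoTSS features: the function names, the use of `None` to mark a missing LR, and the order in which the missing-value fill and the threshold scaling are applied are all hypothetical. Only the threshold (0.639), the fill value ($10^{-135}$), and the 10x10 grid size are taken from the text.

```python
import math

L_THR = 0.639        # LR threshold adopted in LoTSS DR1 (from the text)
MISSING_LR = 1e-135  # arbitrary very low value used when no LR match exists
SOM_SIZE = 10        # side length of the cyclic 10x10 SOM grid


def log_lr_tlv(lr, l_thr=L_THR):
    """Return log10(LR / threshold); a missing LR (None) gets the fill value first."""
    if lr is None:
        lr = MISSING_LR
    return math.log10(lr / l_thr)


def highest_lr(source_lr, gaussian_lrs):
    """Return the larger of the source LR and the best Gaussian-component LR."""
    candidates = [v for v in [source_lr, *gaussian_lrs] if v is not None]
    return max(candidates) if candidates else None


def cyclic_encode(coord, size=SOM_SIZE):
    """Map a grid coordinate to (cos, sin) so that coordinates 0 and `size` coincide."""
    angle = 2.0 * math.pi * coord / size
    return math.cos(angle), math.sin(angle)
```

With this encoding, a source whose LR sits exactly at the threshold has a scaled log value of zero, so the sign of the feature indicates on which side of the threshold the match falls, whatever threshold a future field adopts.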

3.3 Balancing the data set

The number of objects in the two classes created previously is heavily imbalanced: class 1 has 307 352 sources while class 0 comprises 15 751 objects. The major problem with imbalanced data sets is the tendency of the model to get specialized in the class with more examples (i.e. to overfit to class 1). For that reason, we explore different ways of creating a balanced data set by under- and oversampling (cf. Collell, Prelec & Patil 2018, and references therein).

We performed undersampling of the majority class by extracting a random sample of 15 751 objects from class 1 (which is the number of sources available in class 0). Undersampling is the standard method adopted throughout the experiments (Section 4); we use 31 502 sources, comprising the same number of examples in both classes.$^{3}$ In these experiments, we used a training set (used to train the model) of 75 per cent of the data set, and a test set (used to evaluate the model) of 25 per cent. When performing model selection and optimization (see Section 4.4) we use a 10-fold cross-validation (CV); otherwise we test and train the models on 10 different randomly sampled data sets and use the mean value as the model performance.
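The undersampling and 75/25 split described above can be illustrated with a short standard-library sketch. The helper names, the toy data, and the fixed seed are assumptions made for the example; in particular, the split here is a plain random split, and the text does not state whether the split actually used was stratified by class.

```python
import random


def undersample(majority, minority, rng):
    """Randomly draw as many majority-class examples as there are minority-class examples."""
    sampled_majority = rng.sample(majority, len(minority))
    return sampled_majority + list(minority)


def train_test_split(items, rng, train_fraction=0.75):
    """Shuffle a copy of the items and split it into training and test subsets."""
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_train = int(round(train_fraction * len(shuffled)))
    return shuffled[:n_train], shuffled[n_train:]


# Toy data: (source_id, class label) pairs, with class 1 heavily over-represented.
class1 = [(f"src1_{i}", 1) for i in range(200)]
class0 = [(f"src0_{i}", 0) for i in range(12)]

rng = random.Random(42)
balanced = undersample(class1, class0, rng)
train, test = train_test_split(balanced, rng)
```

Repeating the last three lines with different seeds gives the kind of independently resampled data sets over which a mean performance can be quoted.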

Since both under- and oversampling have the potential to affect performance, we conducted experiments to determine which method was the best. We created a synthetic training data set with ADASYN (He et al. 2008), an adaptive sampling technique that is used to generate synthetic examples of the minority class (class 0) by using the original density distribution of the sources in this class. To avoid data leakage, we re-sampled only 75 per cent of the minority class (11 841 sources) and tested on a test set comprising the remaining 25 per cent of these sources (which is balanced as well). The number of sources in the training set before and after re-sampling is 303 386:303 386 for class 1 and 11 841:301 738 for class 0, respectively. We compare the performance using the model trained using under- and oversampling in Section 4.4.2.

Finally, it should be emphasized that although both under- and oversampling techniques aim to create a balanced data set that can generalize well for the two different classes, the distribution of the sources is inherently highly imbalanced and objects that need to be visually inspected are relatively rare in LoTSS (and other deep radio surveys). For that reason, when applying the model trained on a balanced data set to the real (imbalanced) data, which we do in Section 6, other factors require consideration; we discuss these in detail in Section 6.1.

4 EXPERIMENTS

We start by defining in Section 4.1 the metrics that will be used to evaluate the performance of the classifier. In Section 4.2, we create a baseline model for the experiments. This is a less complex yet still effective model that produces acceptable results but has room for improvement. The baseline model was selected using the Tree-Based Pipeline Optimization Tool (tpot; Olson et al. 2016b), and consists of a GBC; see Appendix A for an overview of the ML models and AutoML tools used. In order to improve model performance, we examine the impact of adding different sets of features in Section 4.3, and optimizing the model hyperparameters in Section 4.4.

4.1 Performance metrics

Accuracy is the most common metric to evaluate the performance of an ML classifier. Accuracy can be given as the percentage of the correctly classified inputs relative to the overall classifications: accuracy = (TP+TN)/(TP+FP+TN+FN), where in our case the numbers of false positives (FP), false negatives (FN), true positives (TP), and true negatives (TN) correspond to

TP: sources correctly classified as suitable for LR methods;

FP: sources that should be visually inspected, but which the classifier deems suitable for LR;

TN: sources correctly classified as requiring visual inspection;

FN: sources that could be handled by LR techniques but are sent by the classifier to visual analysis.

When training and testing with a balanced number of examples in each category, accuracy shows the robustness of the classifier. For our binary classification on a balanced data set, the classifier returns the probability that the source can be accepted by LR (class 1), with the probability of being class 0 (requiring LGZ) being 1 minus this probability. The standard threshold used to discriminate between the two is 0.50, and it is the value we adopted when evaluating the results in this section and in Section 5. We do, however, investigate other thresholds in order to evaluate the model applied to an imbalanced data set in Section 6. When evaluating the results we are mainly concerned with minimizing the number of sources wrongly accepted through LR (FP), while keeping a low number of sources that need to be sent to visual analysis (FN and TN sources). That is another reason why we further investigate ‘threshold moving’ in order to establish more suitable cut-off probabilities.

We also analyse the values of recall [also known as sensitivity or true positive rate (TPR)] and precision for our two classes. Precision can be defined as the fraction of sources predicted as being from a certain class that are actually from that class [e.g. TP/(TP+FP)], and recall as the fraction of sources from a certain class that are predicted correctly [e.g. TP/(TP+FN)]. The overall balance between precision and recall for the different classes is given by the F1-score [2×(precision×recall)/(precision + recall)]. In Section 5, we also use the false positive rate (FPR) to illustrate the performance of the classifier. The FPR corresponds to the fraction of sources from class 0 that are incorrectly classified [FP/(FP+TN)].

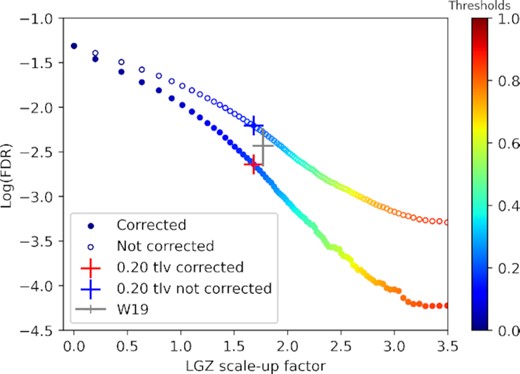

In our analysis, we further define the ‘LGZ scale-up factor’ that corresponds to the total number of sources that we would have to visually inspect scaled by the ones we should really inspect [(FN+TN)/(TN+FP)]. In other words, it represents the multiplicative factor of additional galaxies we would have to send to LGZ besides the ones that should be sent. We compare it with the false discovery rate [FDR = FP/(FP+TP)], which corresponds to the fraction of sources deemed to be suitable for cross-matching by LR that are classified incorrectly.
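The definitions in this subsection can be collected into a small sketch that takes class-1 probabilities, applies a threshold, and evaluates each metric with class 1 (suitable for LR) as the positive class. The function names are hypothetical, treating a probability exactly equal to the threshold as class 1 is an assumption, and no guard against empty denominators is included.

```python
def confusion_counts(true_labels, prob_class1, threshold=0.5):
    """Count TP, FP, TN, FN, with class 1 (suitable for LR) as the positive class."""
    tp = fp = tn = fn = 0
    for truth, prob in zip(true_labels, prob_class1):
        predicted = 1 if prob >= threshold else 0
        if predicted == 1 and truth == 1:
            tp += 1
        elif predicted == 1 and truth == 0:
            fp += 1
        elif predicted == 0 and truth == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def metrics(tp, fp, tn, fn):
    """Evaluate the metrics defined in the text for class 1 as the positive class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "fpr": fp / (fp + tn),
        "fdr": fp / (fp + tp),
        # Sources sent to visual inspection divided by those that truly need it.
        "lgz_scale_up": (fn + tn) / (tn + fp),
    }
```

Raising the threshold above 0.5 moves borderline sources from the predicted class 1 to class 0, which lowers FP at the cost of a larger LGZ scale-up factor; this is the trade-off that ‘threshold moving’ explores.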

4.2 Baseline

In order to create a baseline model, we used tpot and a set of baseline features (BL) that contain only basic radio source information: pybdsf peak and total fluxes, major and minor axis sizes, and the logarithm of the number of Gaussians that compose each pybdsf source.

We ran tpot using a set of conservative parameters: three generations (number of iterations of the optimization process; see Appendix A for more details), a population size of 20 (number of candidate solutions tpot retains in each generation), and a 10-fold CV (number of data splits where each pipeline is trained and evaluated). This allows tpot to search for 600 different models in each run. The choice of the values for these parameters is subjective, and higher values would enable the search for more model combinations. However, running tpot for a larger number of generations and population size would drive tpot towards more complex ML pipelines with stacked models that could cause the model to overfit; this is a current challenge of the method (see Olson et al. 2016b for a discussion). Therefore, we define low values for the tpot parameters, and use it to get recommended pipelines. In that way, we select a simple model that provides interpretability for our experiments and we perform model optimization at a later stage.

We performed different tpot runs and we found a consistent selection of tree-based models as the favoured choice: using different balanced random samples of the full data set, tpot would select a GBC or occasionally an XGBoost (XGBoost is an optimized version of a GBC that can include regularization and allows further optimization due to the number of parameters that can be tuned); when using subsets of the data (half-size data set) a Random Forest or an Extra Trees classifier was favoured. For all the models, we achieved an internal CV accuracy of around 89 per cent and a test accuracy within ±0.5 per cent of the CV value. The GBC achieved higher performance on the CV tests but the Random Forest models showed a higher generalization ability when training with only 50 per cent of the data set. This suggests that the smaller data set does not contain enough examples for tpot to detect strong patterns among the features and therefore it fits a model that performs well with higher-variance data. This also indicates that the classification could benefit from adding more relevant features and could be improved using a GBC model (with optimized hyperparameters, such as a bigger ensemble size and/or a different learning rate). For our baseline model, we therefore select a GBC with 100 estimators and a learning rate of 0.01, which are also the hyperparameters suggested by tpot. The complete specifications of the baseline model can be seen in Table 4.

4.3 Feature selection

We started with the baseline model and investigated the impact of adding different sets of features (as described in Table 2) to the classifier; their impact on classification is illustrated in Table 3. These comprise four sets of features in addition to the (0) baseline features: (1) LR information of the pybdsf source; (2) properties of the Gaussian component with the highest LR value (or the brightest Gaussian if none have a LR match); (3) the nearest pybdsf neighbour information, and (4) the positions of the pybdsf sources on the SOM.

Table 3. Accuracy on the test sets of the GBC model before and after optimization of the hyperparameters, and using cumulative sets of features: baseline features (BL), pybdsf source LR features (LR), pybdsf Gaussian features (GAUS), nearest neighbour (NN) and SOM features (SOM) as described in Table 2. In each case the GBC was run on 10 different undersamplings with random sampling of the data set into training and test sets, and the mean of these 10 is quoted. The standard deviation between the 10 data sets is typically around 0.2 per cent.

| Set of features | Accuracy with baseline hyperparameters (per cent) | Accuracy with optimized GBC (per cent) |
|---|---|---|
| (0) BL | 88.7 | 88.7 |
| (1) BL and LR | 90.2 | 90.2 |
| (2) 1 and GAUS | 90.3 | 90.3 |
| (3) 2 and NN | 94.4 | 94.6 |
| (4) 3 and SOM | 94.7 | 94.8 |


Source LR features: The addition of the LR features (LR value and LR distance) of the pybdsf source increases the performance accuracy of the baseline model by about 1.5 per cent (from 88.7 per cent to 90.2 per cent; see Table 3). This improvement is expected, as the presence or absence of a potential host galaxy at the expected position is a strong indicator of whether the source has been correctly associated.

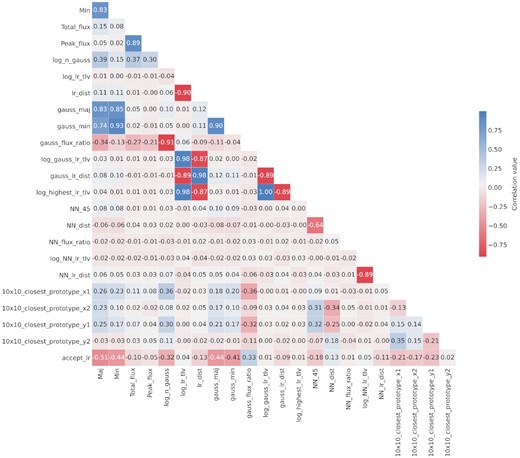

Gaussian (GAUS) features: The addition of the Gaussian features has a small impact on the model with only minor improvements for the classification. When adding these features to the Baseline features and the LR features, the improvement is 0.1 per cent. Fig. 2 shows the correlation between different input features (and with the resulting classification). It is evident from this plot that the flux ratio relative to the source and the size of the Gaussian (gauss_min and gauss_maj) do show, respectively, a strong positive and strong negative correlation with the ‘accept_lr’ output, and thus contain useful information. However, the sizes of the Gaussians show a very strong correlation with the sizes of the sources, the flux ratio between the Gaussian and the source is highly correlated (inversely) with the number of Gaussians that compose each pybdsf source, and the Gaussian LR features (log_gauss_lr_tlv and gauss_lr_dist) are also highly correlated with the source LR parameters (not least because most sources are composed of single Gaussian components). Thus, the inclusion of the Gaussian features does not introduce much new information. Nevertheless, we include these features in our final model, as they are easily available and offer marginal improvement.

Figure 2. Correlation matrix using Pearson correlation. This shows the correlation coefficients between each of the different input features considered for the modelling (blue for positive linear correlation, red for negative linear correlation, scaling from 1 to -1). The bottom row provides the correlation of each parameter with the final ‘accept_lr’ outcome, indicating the strength of any linear relation between the features and the target class.

Nearest neighbour (NN) features: Adding the NN information has the greatest impact on the model performance, improving the classification by more than 4 per cent. Even though there is not a strong linear correlation with the ‘accept_lr’ output in Fig. 2, the NN_dist, NN_lr_dist, log_NN_lr_tlv, and NN_45 parameters provide valuable additional information for the classification, as does the flux ratio of the NN source relative to the source under consideration.

Self-organizing Map (SOM) features: Experiments using solely the baseline and the SOM features improve the classifier by about 2.5 per cent compared to the baseline only. Impressively, if using only the SOM as input features (not shown in Table 3), the model achieves a classification accuracy of almost 80 per cent, which demonstrates the power of the morphological representation for the classification. However, it also demonstrates that some essential information contained within the baseline features is not retrievable from the SOM alone.

The addition of the SOM features on top of all of the other different experiments improved the model accuracy by 0.3 per cent, to 94.7 per cent on the baseline model and 0.2 per cent on the final model. This indicates that the information encoded in the SOM, through a visual representation of the source (compact versus extended emission, single versus blended versus multiple radio component source, etc.) does provide some additional information over the other features. However, this is limited, due to the correlations between the SOM and other features as seen in Fig. 2. Due to the relatively small improvement, and because the SOM features come from an external source, we have decided to exclude the SOM from our final model.

Deconvolved features: We also investigated using the deconvolved (DC) major and minor axis instead of the measured values, and we found the same results. We ran the model using the DC and non-DC major and minor axis for both the pybdsf sources and the Gaussians and the differences were negligible. Baseline experiments replacing the measured sizes by the deconvolved sizes of the sources pointed to a small improvement on the classifier, but well within the range of the variance of the model. In our final model, we opted to use the non-deconvolved sizes as these are potentially more robust against inaccurately measured beam sizes; however, this choice is arbitrary and is not expected to have a significant effect on the classifier for LoTSS DR1.

4.4 Model optimization

4.4.1 Selection of model and model hyperparameters

After feature selection, we performed further experiments using tpot to optimize the model hyperparameters using a single data set. The hyperparameters are used to adjust the learning process (e.g. learning rate) and the model specifications (e.g. number of estimators, i.e. trees, on a tree-based model). We ran tpot for three generations with a population size of 5, a 10-fold cross-validation (CV), and the sets of features from Table 2 excluding the SOM. The range of values we defined for tpot to perform the search, and the optimized set of model hyperparameters for the GBC model finally selected, can be seen in Table 4.

Table 4. GBC model hyperparameters: baseline, tuning values, and finally adopted optimized hyperparameters obtained by tpot optimization. The learning rate controls how quickly the loss is corrected at each iteration; no. of estimators corresponds to the number of sequential trees created by the model; max depth represents the maximum tree extension; subsample is the proportion of data used in each tree; min samples split corresponds to the minimum number of examples necessary to split a tree into different branches; min samples leaf is the minimum number of examples required in a terminal leaf; and max features is the maximum number of features to take into consideration while searching for the optimal split.

| Hyperparameters | Baseline GBC | Search values | Optimized GBC |
|---|---|---|---|
| Learning rate | 0.01 | 0.001, 0.01, 0.05, 0.1, 0.5, 1 | 0.01 |
| No. of estimators | 100 | 100, 250, 500, 1000 | 500 |
| Max depth | 10 | Range (1, 11, steps = 1) | 8 |
| Subsample | 0.75 | Range (0.05, 1.01, steps = 0.05) | 0.15 |
| Min samples split | 6 | Range (2, 21, steps = 1) | 12 |
| Min samples leaf | 10 | Range (1, 21, steps = 1) | 5 |
| Max features | 0.35 | Range (0.05, 1.01, steps = 0.05) | 0.6 |


Since there is some discussion in the literature about boosting methods overfitting under certain circumstances (see Appendix A for references), we paid special attention to checking that the model we use does not overfit. Therefore, and for verification purposes, we tested different possible combinations of hyperparameters. Increasing the learning rate and increasing the number of estimators both make the model increase its accuracy; for example, for 1000 estimators the accuracy is able to reach values higher than 99 per cent on the training set and 94.8 per cent on the test set. However, a training set performance close to 100 per cent is a strong indication that the model is overfitting, especially with the significant difference in performance between the training and test sets (although the high accuracy on the test set shows the model is still able to generalize). tpot favours the use of 500 estimators, which offers good results and minimizes the risk of overfitting. Our optimized GBC model achieves an internal tpot CV score of 94.6 per cent and an average accuracy of 94.6 per cent on the test and 95.9 per cent on the training set.$^{4}$ These are also the values obtained for the model trained and optimized using a single data set which we further use to present the results in the next section. This is within 0.2 per cent of the performance with 1000 estimators, but by using a smaller number of estimators we reduce the complexity of the model as well as training time, and can have higher confidence that the model is not overfitting.

We also investigated an XGBoost model, as this was also favoured by tpot. The best XGBoost model achieves an internal tpot CV score of 94.6 per cent and an average accuracy of 94.7 per cent on the test and 96.6 per cent on the training set. This is a marginally superior performance on the test set to the GBC model, but within the scatter of different data set selections, and also has a higher difference between test and training set performance. Given this, we opt to retain the less complex GBC model for our final analysis.

Overall, as can be seen from Tables 3 and 4, the hyperparameters and performance for the optimized GBC model are not dissimilar from those of the baseline model.

4.4.2 Training with re-sampling

To test whether under- or oversampling is a better approach, we applied the optimized classifier to the re-sampled data (see Section 3.3). Not surprisingly, we found that training the model with more examples of class 0 (even if they are synthetic) results in a higher precision for this class. Additionally, when compared to training without re-sampling, it results in a more proportional model performance across the two classes. This model reduces the number of sources that need to be visually inspected (the value of recall for class 1 increases), but this comes at the cost of accepting more sources for LR than should be (precision on class 0 decreases). This increase in the number of false positives is not in alignment with our science goals, as these sources will all remain incorrectly classified in the final analysis. The overall performance for the re-sampled data sets decreases by 0.7 per cent in accuracy on the test set, compared to the undersampling method, while the accuracy for the training set increases by 1.16 per cent. This difference is particularly evident for sources in class 1, for which the model became too specialized: it achieves 98.41 per cent precision on the training set, which does not allow it to generalize well on the test set for this class. This is the most probable reason why the model accepts too many false positive sources as suitable for LR analysis. We conclude that training with re-sampling leads to overfitting of the classifier, and hence we opt to train the final classifier with undersampling instead.
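For readers unfamiliar with the undersampling option retained here, a minimal sketch follows. It assumes plain random undersampling of the majority class; the actual procedures are those defined in Section 3.3 and are not reproduced. The function name `undersample_majority` and the toy arrays are hypothetical.

```python
import numpy as np

def undersample_majority(X, y, seed=0):
    """Randomly drop rows so that every class has as many rows as the rarest one."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    n_min = counts.min()
    # Draw n_min row indices per class, without replacement.
    keep = np.concatenate([
        rng.choice(np.flatnonzero(y == c), size=n_min, replace=False)
        for c in classes
    ])
    rng.shuffle(keep)
    return X[keep], y[keep]

# Toy imbalanced sample: 900 class 1 rows and 100 class 0 rows (made up).
rng = np.random.default_rng(1)
y = np.array([1] * 900 + [0] * 100)
X = rng.normal(size=(1000, 3))

X_bal, y_bal = undersample_majority(X, y)
balanced_counts = np.bincount(y_bal)
print(balanced_counts)
```

Because no synthetic rows are created, every training example in the balanced set is a real source, at the cost of discarding most of the majority class.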

5 MODEL PERFORMANCE AND INTERPRETATION

5.1 Final model performance

The model that we adopt in the rest of the paper is the GBC model with the optimized hyperparameters described in Table 4 and the 18 features (which exclude the SOM features) from Table 2, trained and tested on a balanced data set created with undersampling. In Table 5, we present the suite of metrics defined in Section 4.1 to assess the performance of a binary classifier, in order to illustrate the overall performance of the model, as well as the performance on the different classes. The results presented here are run on an independent test set and adopt a standard cut-off probability of 50 per cent between the two classes.

Performance on the test and training sets: the results give the overall accuracy, and the F1-score, precision and recall for each class (where 1 = suitable for LR; 0 = requires LGZ), for a decision threshold of 0.50 or 50 per cent. The results quoted are for a single undersampled balanced data set.

| Metric | Test set | Training set |
|---|---|---|
| Accuracy | 0.946 | 0.959 |
| F1-score 1 | 0.945 | 0.958 |
| F1-score 0 | 0.947 | 0.960 |
| Precision 1 | 0.963 | 0.975 |
| Precision 0 | 0.930 | 0.944 |
| Recall 1 | 0.928 | 0.942 |
| Recall 0 | 0.964 | 0.976 |


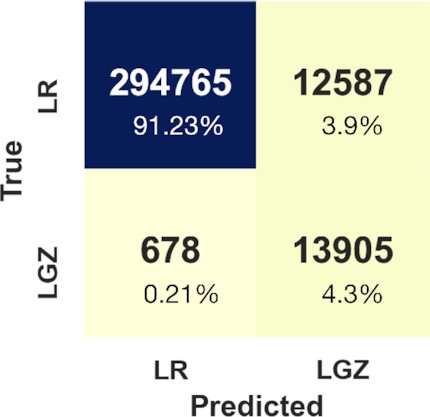

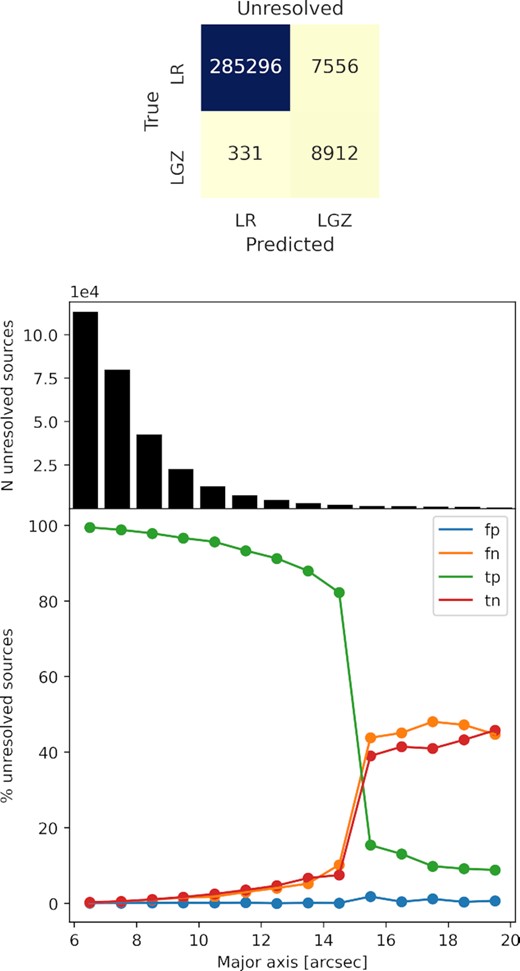

Our best model achieves an overall accuracy of 94.6 per cent on the test set, and just 1.3 per cent higher on the training set. The model can be seen to favour precision for class 1 (sources that can be cross-matched using LR) and recall for class 0 (sources that require visual inspection). These are the values we intend to optimize: while we want to avoid a high number of visual inspections, it is more important to reduce the number of sources accepted as class 1 when they do not belong to that class. Of the sources accepted as being suitable for LR analysis, 96.3 per cent actually belong to that class; similarly, 96.4 per cent of the sources that need to be visually inspected are sent to visual inspection. While this means that there is already a low percentage of sources wrongly predicted to be class 1, in practice the number that will end up being misclassified is even smaller, as some of these sources will be corrected during the LGZ process (see corrections applied in Section 6.2). The model yields slightly lower values of precision for class 0 and recall for class 1, meaning that the model sends more sources to visual inspection than needed. Overall, the classification predictions send around 7 per cent more sources (in a balanced data set) to visual inspection than need to be inspected; this percentage will be significantly higher when applying the model to a highly imbalanced data set with many more sources in class 1.
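The relations between the quoted test-set values can be checked with a short calculation. The sketch below starts from the two recall values in Table 5 and, assuming equal class sizes, derives the accuracy, both precisions and both F1-scores; these agree with the table to within rounding. It also computes the number of class 1 sources sent unnecessarily to inspection relative to the number of class 0 sources, which is one reading of the "around 7 per cent" figure. The 10:1 class ratio in the second call is purely hypothetical and only illustrates why this fraction grows on imbalanced data.

```python
def metrics_from_recall(recall_1, recall_0, n_1=1.0, n_0=1.0):
    """Derive accuracy and precision from per-class recall and class sizes."""
    tp_1 = recall_1 * n_1                 # class 1 correctly accepted for LR
    unneeded_inspection = n_1 - tp_1      # class 1 sent to visual inspection
    tp_0 = recall_0 * n_0                 # class 0 correctly sent to inspection
    wrongly_accepted = n_0 - tp_0         # class 0 wrongly accepted for LR
    return {
        "accuracy": (tp_1 + tp_0) / (n_1 + n_0),
        "precision_1": tp_1 / (tp_1 + wrongly_accepted),
        "precision_0": tp_0 / (tp_0 + unneeded_inspection),
        # Unneeded inspections relative to the sources that do need inspection.
        "unneeded_fraction": unneeded_inspection / n_0,
    }

def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# Test-set recalls from Table 5, balanced classes.
balanced = metrics_from_recall(0.928, 0.964)
f1_1 = f1_score(balanced["precision_1"], 0.928)
f1_0 = f1_score(balanced["precision_0"], 0.964)

# Same recalls with a hypothetical 10:1 excess of class 1 sources.
imbalanced = metrics_from_recall(0.928, 0.964, n_1=10.0, n_0=1.0)

print(balanced, f1_1, f1_0)
print(imbalanced["unneeded_fraction"])
```

Since the recalls are themselves rounded to three decimals, the derived precisions can differ from the tabulated ones in the last digit.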

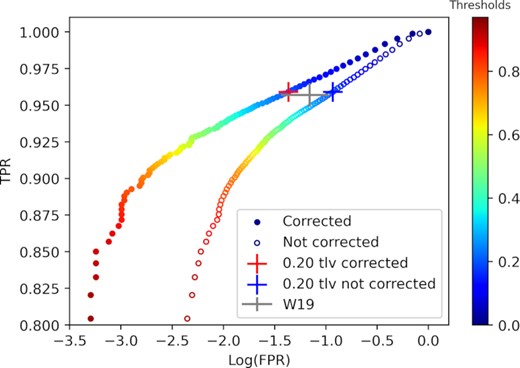

For illustration, we show in Fig. 3 the ROC curve of the model. This shows the true positive rate (TPR) against the false positive rate (FPR) plotted for different thresholds. The plot illustrates the performance of the model in detecting a source that can be processed by LR (i.e. a positive test), as we achieve values close to a TPR of 1 and an FPR of 0, and an AUC (Area Under the Curve) for the test set of 0.98 (where an AUC of unity would correspond to the perfect classifier). Instead of using the default 0.50 threshold for balanced data sets, we can further explore a more suitable cut-off threshold closer to the top left corner of the curve, which is particularly important when dealing with imbalanced data sets. We therefore explore the effect of varying the cut-off threshold in Section 6.1 in order to optimize the trade-off between the number of sources wrongly accepted as suitable for LR and the number of sources sent to visual inspection.

Receiver Operating Characteristic (ROC) curve of the optimized model for a training and test balanced data set, showing that this has an Area Under the Curve (AUC) close to unity, where 1 would be the value for a perfect classifier. The true positive rate [TP/(TP + FN)] is the rate at which a source suitable to cross-match with LR is correctly identified as such out of all the ones that can be done using this method, while the false positive rate [FP/(TN + FP)] is the proportion of sources that are incorrectly predicted to be suitable for LR out of all the ones that require visual inspection.
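The construction of such a curve, and the idea of picking a threshold near the top left corner, can be sketched with scikit-learn. The scores below are synthetic and the "closest to the corner" rule is only one common heuristic, adopted here as an assumption; the criterion actually used to optimize the threshold is the one discussed in Section 6.1.

```python
import numpy as np
from sklearn.metrics import roc_auc_score, roc_curve

# Synthetic predicted probabilities of class 1 for a balanced sample (made up).
rng = np.random.default_rng(0)
y_true = np.repeat([0, 1], 500)
scores = np.clip(
    np.concatenate([rng.normal(0.3, 0.15, 500), rng.normal(0.7, 0.15, 500)]),
    0.0, 1.0)

# One (FPR, TPR) point per candidate threshold, plus the area under the curve.
fpr, tpr, thresholds = roc_curve(y_true, scores)
auc = roc_auc_score(y_true, scores)

# Heuristic: threshold whose ROC point lies closest to (FPR, TPR) = (0, 1).
distance_to_corner = np.hypot(fpr, 1.0 - tpr)
best = int(np.argmin(distance_to_corner))
best_threshold = float(thresholds[best])

print(f"AUC={auc:.3f} threshold={best_threshold:.3f} "
      f"FPR={fpr[best]:.3f} TPR={tpr[best]:.3f}")
```

Other criteria, for example fixing a maximum acceptable FPR, would select a different point on the same curve.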

5.2 Feature importance in the model

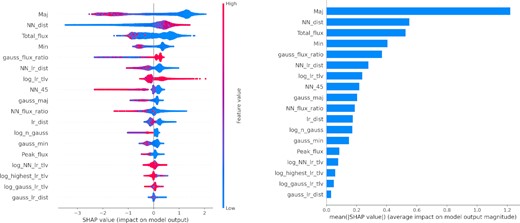

To interpret the importance of the different features for the classification, we use SHAP (SHapley Additive exPlanations; Lundberg & Lee 2017), through the use of a python package explicitly applied to tree-based ML models (Lundberg et al. 2020). The method measures the impact of different features on the model classification by averaging the contribution of a particular feature compared to when that feature is absent for the prediction.

The SHAP values are computed individually for each source in the training set, and the left-hand panel of Fig. 4 shows how the values of each feature contribute to the classification. SHAP values are given in units of log of odds, with positive SHAP values implying that the value of the feature favours class 1 sources and negative SHAP values implying that the feature value favours class 0 sources. The colour-coding on the plot indicates the value of the input feature compared to the range of values of that feature for all sources. Thus, for example, higher values of the major axis are associated with sources that have highly negative SHAP values (class 0), while lower major axis values favour class 1.

Left: SHAP values for each feature and for each source within the training set. The colour coding indicates the value of the feature for that source compared to the range of values for that feature across all sources, as indicated by the colour bar, and the thickness of the plot indicates the density of sources at a given SHAP value. Larger absolute SHAP values indicate higher impact in the prediction. Right: SHAP feature importance computed as the mean of the absolute SHAP values. These are ordered such that the features with the highest predictive power are at the top.

The right-hand panel of Fig. 4 shows the global contribution of the different features to the model predictions, in descending order. These correspond to the mean of the absolute SHAP values per feature across all the data on the training set. The features at the top of the plot are those with the highest predictive power: these are the major axis of the source followed by the distance to the source’s NN. The features towards the bottom of the plot provide the least predictive power of those considered in the model.
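To make the two panels concrete, the sketch below takes a small, entirely made-up matrix of SHAP values in log-odds units and computes the global importance as the mean absolute value per feature. It also uses the additive property of SHAP values (base value plus the sum of the per-feature contributions gives the model output in log-odds) to recover a class 1 probability per source. The feature subset, the numbers and the base value are hypothetical; in practice the matrix would come from the tree explainer of the SHAP package.

```python
import numpy as np

# Three of the model features, chosen for illustration only.
feature_names = ["major axis", "NN distance", "minor axis"]

# Hypothetical SHAP values in log-odds units, shape (n_sources, n_features).
shap_values = np.array([
    [-2.0, -0.5, -0.3],
    [ 1.0,  0.4,  0.1],
    [ 0.8, -0.6,  0.2],
    [-1.2,  0.5, -0.2],
])
base_value = 0.0  # hypothetical expected model output in log-odds

# Global importance: mean of the absolute SHAP values per feature.
importance = np.abs(shap_values).mean(axis=0)
ranked = [feature_names[i] for i in np.argsort(importance)[::-1]]

# Per-source prediction: add the contributions, then map log-odds to probability.
log_odds = base_value + shap_values.sum(axis=1)
prob_class_1 = 1.0 / (1.0 + np.exp(-log_odds))

print(ranked)
print(np.round(prob_class_1, 3))
```

Positive row sums push a source towards class 1 and negative sums towards class 0, matching the sign convention described for the left-hand panel.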

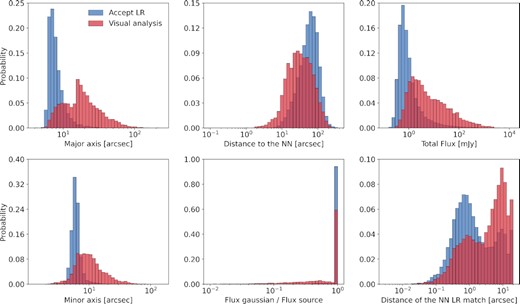

Fig. 5 shows the distribution of feature values for the six features picked out to have the highest predictive power. Specifically, it shows histograms of the distributions of feature values for the two classes of objects (class 0, class 1), each normalized to the total number of sources of that class. In each case a distinction between the two classes is apparent, and is in the direction which would be expected. Smaller sources (both major axis in the upper left and minor axis in the lower left) have a higher probability of having a correct cross-match by statistical means, as opposed to more extended sources, which are more likely to be resolved and possibly complex. Brighter sources (upper right) are also more likely to require visual analysis, due to the predominance of more extended AGNs at higher flux densities compared to more compact AGNs and SFGs at fainter flux densities (see discussion in W19). Sources for which the Gaussian component contains only a fraction of the total flux density and hence other Gaussian components must also be present, indicating an extended source, are also more likely to need visual analysis (lower middle panel), as compared to compact sources with all of their flux in a single Gaussian. Finally, those sources with a close near neighbour (upper middle panel), especially when that near neighbour does not have a close LR match (lower right-hand panel) are also indicative of multicomponent sources which require visual analysis.

Probability distributions of the most distinctive features, as identified by the SHAP analysis. In each case, blue corresponds to sources that are suitable for statistical match by LR (class 1) and red represents sources that require visual analysis (class 0). For all of these features, a systematic offset in feature values between class 1 and class 0 sources is apparent, in the direction that would be expected from the radio source properties (see the text for details).
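The per-class normalization used for these histograms can be sketched as follows: each class is binned on a common grid and divided by the number of sources in that class, so that classes of very different size can be compared directly. The log-normal samples standing in for a size-like feature, the class sizes and the bin grid are all assumptions made for this example.

```python
import numpy as np

# Made-up feature values for the two classes (very different sample sizes).
rng = np.random.default_rng(0)
values_class_1 = rng.lognormal(mean=2.0, sigma=0.3, size=900)
values_class_0 = rng.lognormal(mean=3.0, sigma=0.5, size=100)

# Common bin grid covering every value in both classes.
upper = max(values_class_1.max(), values_class_0.max())
bins = np.linspace(0.0, upper, 31)

def class_fraction_hist(values, bins):
    """Histogram normalized to the total number of sources in the class."""
    counts, _ = np.histogram(values, bins=bins)
    return counts / len(values)

hist_1 = class_fraction_hist(values_class_1, bins)
hist_0 = class_fraction_hist(values_class_0, bins)

# Each histogram sums to one, independent of the class size.
print(hist_1.sum(), hist_0.sum())
```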

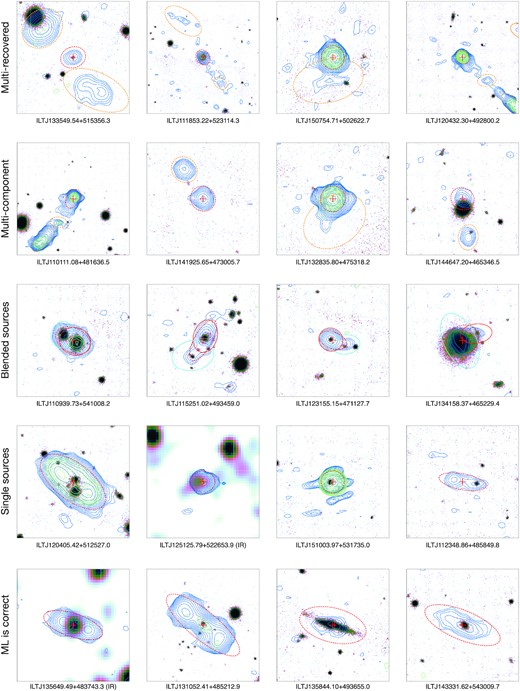

6 APPLICATION TO FULL LOTSS DATA SETS